Phishing attacks have become so commonplace that something new had to come along to up the game. A significant concern in cybersecurity for more than 20 years, phishing tactics are shared widely and dissected in blogs and other professional exchanges among security experts, and as a result, there is comprehensive knowledge on the subject. This blog will discuss the evolution of phishing into AI-based phishing and examine the measures—like AI phishing detection—that security professionals are taking to address this threat.

What is phishing and why does it matter?

Phishing is the use of fake e-mails designed to trick recipients into clicking dangerous links, downloading malicious files, or providing sensitive information. Attackers like to target businesses because the payoff can be large for someone skilled and persistent.

Not so long ago, the advice for outsmarting phishing tactics boiled down to this:

- Hover over and inspect the sender’s address: if it reads as random characters or a misspelled domain (e.g., xj_221tbl@autozonne.com), that is a big clue that it is fake.

- Hover over the link to verify that it goes where it says and not to a sketchy-looking URL (e.g., autozzonne.com).

- Remember what your parents taught you: if the offer looks too good to be true, it probably is!

- Watch for bad grammar or obvious spelling mistakes.
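The first two checks are mechanical enough to sketch in code. The Python below is only an illustration, not how any particular product works: the `TRUSTED_DOMAINS` allowlist, the function names, and the distance threshold of 2 are all assumptions made for this example. It flags a domain that is close to, but not exactly, a trusted one, and flags a link whose visible text names a different host than its real target.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: domains this organization really deals with.
TRUSTED_DOMAINS = {"autozone.com"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def sender_domain(address: str) -> str:
    """Return the lowercased part of an e-mail address after the last '@'."""
    return address.rsplit("@", 1)[-1].lower()


def looks_like_trusted(domain: str, max_distance: int = 2) -> bool:
    """True if domain is a near miss of a trusted domain (not an exact match)."""
    domain = domain.lower()
    if domain in TRUSTED_DOMAINS:
        return False
    return any(edit_distance(domain, trusted) <= max_distance
               for trusted in TRUSTED_DOMAINS)


def link_mismatch(display_text: str, href: str) -> bool:
    """True if the visible link text names a host other than the real target."""
    text = display_text.strip()
    if " " in text or "." not in text:
        return False  # visible text is not a URL, so there is nothing to compare
    shown = urlparse(text if "://" in text else "//" + text).hostname
    actual = urlparse(href).hostname
    return bool(shown and actual and shown != actual)


print(looks_like_trusted(sender_domain("xj_221tbl@autozonne.com")))
print(link_mismatch("autozone.com", "http://autozzonne.com/login"))
```

A check this simple has obvious gaps (it treats `www.autozone.com` and `autozone.com` as different hosts, for instance), which is part of why attackers have been able to work around this kind of advice.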

Naturally, attackers keep busy looking for ways to get around this advice. It is a continuous game in which each side tries to stay one step ahead of the other: defenders execute tactics to stop attacks, and attackers work out how to defeat the defenders’ tactics. Phishing matters because it keeps working: an attacker can usually depend on at least one person to take the bait.

And in the new age of artificial intelligence, this strategy has evolved in speed and complexity thanks to AI-generated phishing messages; fortunately, AI phishing detection is evolving at a similar pace.

What has worked in the past to defeat phishing?

To more efficiently detect the indicators described above, the security industry created tools that put them that proverbial step ahead. These tools filter out suspect sending domains, block certain attachments, and open links in virtual machines to protect recipients from malicious content.
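As a rough illustration of the filtering half of that tooling, here is a minimal Python sketch built on the standard library's `email` package. The denylist, the blocked extensions, and the `quarantine_reasons` function are hypothetical names chosen for this example; real mail gateways use far richer signals, and this sketch does not attempt the link-sandboxing part at all.

```python
from email.message import EmailMessage, Message
from email.utils import parseaddr
from pathlib import PurePosixPath

# Hypothetical policy values, for illustration only.
BLOCKED_SENDER_DOMAINS = {"autozonne.com"}
BLOCKED_EXTENSIONS = {".exe", ".js", ".scr", ".vbs"}


def quarantine_reasons(msg: Message) -> list[str]:
    """Return the reasons a message should be held; an empty list means deliver."""
    reasons = []

    # Check the sending domain against the denylist.
    _, address = parseaddr(str(msg.get("From", "")))
    domain = address.rsplit("@", 1)[-1].lower()
    if domain in BLOCKED_SENDER_DOMAINS:
        reasons.append(f"blocked sender domain: {domain}")

    # Check every MIME part for an attachment with a blocked extension.
    for part in msg.walk():
        filename = part.get_filename()
        if filename and PurePosixPath(filename.lower()).suffix in BLOCKED_EXTENSIONS:
            reasons.append(f"blocked attachment: {filename}")

    return reasons


# Build a sample message to run through the filter.
sample = EmailMessage()
sample["From"] = "HR <xj_221tbl@autozonne.com>"
sample["To"] = "employee@example.com"
sample["Subject"] = "Your receipt"
sample.set_content("Please review the attached receipt.")
sample.add_attachment(b"MZ", maintype="application",
                      subtype="octet-stream", filename="receipt.exe")

for reason in quarantine_reasons(sample):
    print(reason)
```

Static lists like these are exactly what attackers learned to sidestep with fresh lookalike domains and less obvious payloads.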

The industry also improved its security and awareness training by developing high-quality content designed to teach people how to identify phishing attacks. Many organizations mandate that all employees complete this kind of training annually, or even more frequently.

Subsequently, attackers advanced their phishing methods by employing new tactics and techniques, such as registering domains that closely mimic legitimate ones, utilizing expired domains instead of newly created ones, and developing more sophisticated evasion strategies to increase the likelihood of their links and attachments bypassing defenses. In response, the security industry evolved requisite controls and training resources, thus perpetuating this ongoing cycle.

Then generative AI (GenAI) entered the picture and, voila, attackers gained a new and powerful tool to create more effective phishing content more quickly. Consequently, security experts went on the defensive, developing AI phishing detection tools with the latest technology.

How effective are these AI-enhanced phishing tactics?

According to recent research from Bruce Schneier et al., attackers who use LLMs or GenAI solutions in their phishing campaigns see a 60% success rate and cost savings of 95% or more for their overall attack strategy.

Obviously, AI makes it much easier and cheaper to create successful phishing campaigns!

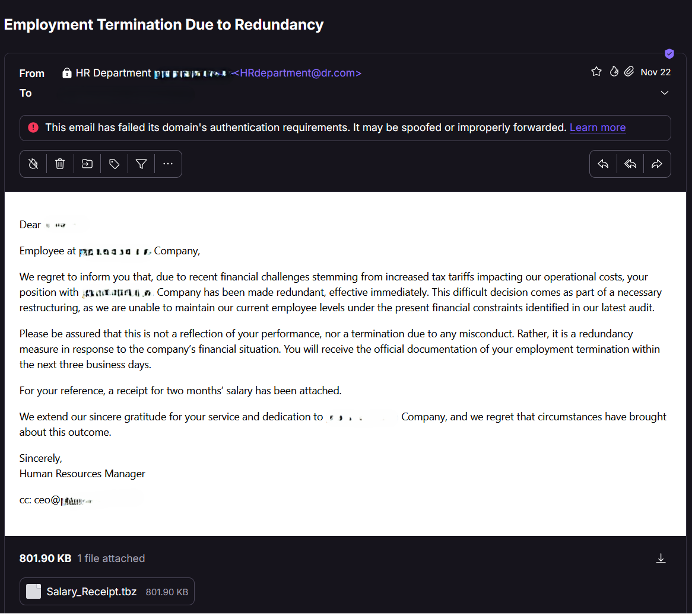

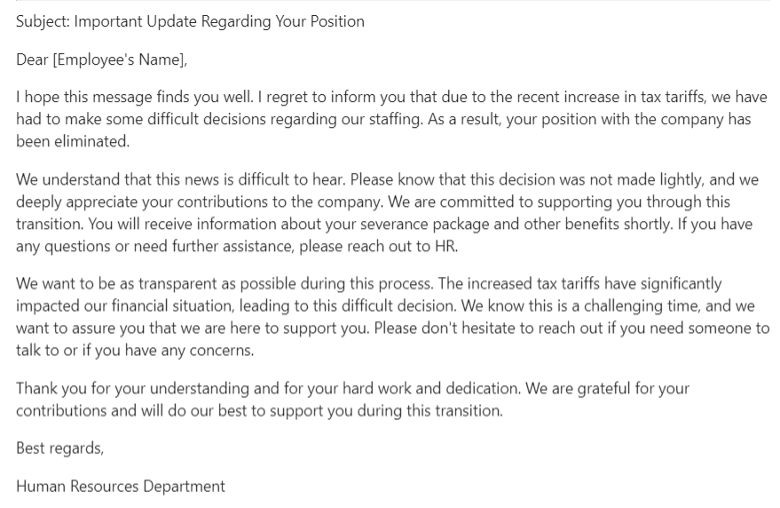

Look at the screenshot of this message that ended up in my spam folder recently. I suspected that it was generated with an LLM because of how the content was written and presented.

While there are clues that it is a phishing e-mail, the content is otherwise pretty convincing. Besides sounding like a message any business might send to its employees, the language has enough emotional weight to elicit the kind of psychological response an attacker is looking to exploit: “I’m being laid off!? What? And what is up with this receipt?!?”

I did not fall for the message (it was in my junk e-mail folder, after all), but it did read as if ChatGPT could have written it, so I tried to generate a similar message with another LLM, Microsoft Copilot. With just five prompts to fine-tune the details, I ended up with this:

Here are the specific prompts I used in order:

- Can you help me draft an e-mail letting a person with our company know that their position has been eliminated due to increased tax tariffs?

- How should I address the employee’s concerns?

- Can you provide examples of empathetic responses?

- Can you help me draft a specific message using these examples?

- Can you sign it with a more appropriate message from the HR department?

I wrote prompts 1 and 5 myself, and Copilot suggested prompts 2 through 4. In just a few minutes, Copilot had generated a convincing phishing e-mail that could just as easily have been the body of the spam message in the first example above.

From there, it is simply a matter of registering a domain, generating a payload, and then sending the e-mail to my targets. Specific programmatic guardrails prevent ChatGPT, Claude, or Copilot from automating all of that, but there are existing models that do not have the same controls. In addition to sending those e-mails, these models can be convinced to generate malicious payloads, landing pages, and delivery mechanisms (you won’t find links to these in this blog for safety reasons, but they are not difficult to find).

AI phishing detection keeps the good guys ahead

Although these AI-enhanced phishing tactics sound “impressive,” and even more than a step ahead of what the security industry is prepared for, you can ensure that your organization is ready to defend against this newer, cost-effective strategy. In addition to what every organization should already be doing (multi-factor authentication, anti-phishing protection technologies, and effective patch management), you can implement:

- Relevant, regular security and awareness training. Run scenarios with LLM-generated content in a safe manner to prepare your employees before a real phishing attack takes a bite out of your organization!

- AI-driven security tools. Make sure that your defenses use the same kind of AI tooling that the bad actors use. Look for a vendor with a solid reputation for both expertise and tools!

- Frequent, regular security assessments. Assess your security program and applications on a regular basis to identify vulnerabilities and get your defensive measures up to date. Our assessments team can deploy AI-enhanced offensive tools to conduct custom assessments of your organization and then design specific recommendations to remediate any issues that we identify!

While full of promise, the digital age is also full of pitfalls like phishing and other tactics to exploit the unwary. Fortunately, security professionals use tools like AI phishing detection to stay on top of what malicious actors are doing and to protect businesses and individuals alike.
